Biocluster2

Revision as of 07:45, 24 July 2020

Quick Links

- Main Site - http://biocluster2.igb.illinois.edu

- Request Account - http://www.igb.illinois.edu/content/biocluster-account-form

- Cluster Accounting - https://biocluster2.igb.illinois.edu/accounting/

- Cluster Monitoring - http://biocluster2.igb.illinois.edu/ganglia/

- SLURM Script Generator - http://www-app2.igb.illinois.edu/tools/slurm/

- Biocluster Applications - https://help.igb.illinois.edu/Biocluster_Applications

Description

Biocluster is the High Performance Computing (HPC) resource for the Carl R. Woese Institute for Genomic Biology (IGB) at the University of Illinois at Urbana-Champaign (UIUC). With 2824 cores and over 27.7 TB of RAM, Biocluster offers a mix of RAM and CPU configurations across its nodes to serve the varied computational needs of the IGB and the bioinformatics community at UIUC. For storage, Biocluster has 1.3 petabytes on its GPFS filesystem for reliable, high-speed data transfers within the cluster. Networking in Biocluster is 1, 10 or 40 Gigabit Ethernet, depending on the class of node and its data transfer needs.

Cluster Specifications

| Queue Name | Nodes | Cores (CPUs) per Node | Memory | Networking | Scratch Space (/scratch) | GPUs |
|---|---|---|---|---|---|---|
| normal (default) | 5 Supermicro SYS-2049U-TR4 | 72 Intel Xeon Gold 6150 @ 2.7 GHz | 1.2 TB | 40 Gb Ethernet | 7 TB SSD | |
| lowmem | 8 Supermicro SYS-F618R2-RTN+ | 12 Intel Xeon E5-2603 v4 @ 1.70 GHz | 64 GB | 10 Gb Ethernet | 192 GB | |
| gpu | 1 Supermicro | 28 Intel Xeon E5-2680 @ 2.4 GHz | 128 GB | 1 Gb Ethernet | 1 TB | 4 NVIDIA GeForce GTX 1080 Ti |
| classroom | 10 Dell PowerEdge R620 | 24 Intel Xeon E5-2697 v2 @ 2.7 GHz | 384 GB | 10 Gb Ethernet | 750 GB | |

Storage

Information

- The storage system is a GPFS filesystem with 1.3 Petabytes of total disk space with 2 copies of the data. This data is NOT backed up.

- The data is spread across 8 GPFS storage nodes.

Cost

| Cost (Per Terabyte Per Month) |

|---|

| $10 |

Calculate Usage (/home)

- Each month, you will receive a bill for your monthly usage. We take a snapshot of usage daily, then average the 95th percentile of the daily snapshots to get an average usage for the month.

- You can calculate your usage using the du command. An example is below. The result will be double what you are billed, as there are 2 copies of the data. Make sure to divide by 2.

du -h /home/a-m/username
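The divide-by-2 step can be sketched as a small helper. This is only an illustration: the function name estimate_storage_cost is made up here, it assumes 1 TB = 1024^3 KiB, and it uses the $10 per terabyte per month rate from the Cost table. The actual bill is based on the daily snapshots described above, so treat the result as a rough point-in-time estimate.

```shell
#!/bin/bash
# Hypothetical helper (not a cluster tool): turn a raw `du -sk` figure
# (KiB, counting both copies of the data) into billed terabytes and an
# estimated monthly cost.
# Assumptions: 1 TB = 1024^3 KiB, rate = $10 per TB per month.
estimate_storage_cost() {
    local raw_kib=$1
    awk -v kib="$raw_kib" 'BEGIN {
        billed_tb = (kib / 2) / (1024 * 1024 * 1024)   # halve for the 2 copies
        printf "%.3f TB billed, about $%.2f per month\n", billed_tb, billed_tb * 10
    }'
}

# Example with a fixed number: du reports 4 TiB of raw usage
estimate_storage_cost 4294967296

# On the cluster, pass in the real figure (path is the placeholder used above):
# estimate_storage_cost "$(du -sk /home/a-m/username | cut -f1)"
```

The -s option makes du print a single total and -k reports it in kilobytes, which is easier to do arithmetic on than the human-readable -h output.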

Calculate Usage (/private_stores)

- These are private data storage nodes. They do not get billed monthly.

- The filesystems are XFS shared over NFS.

- To calculate usage, use the du command

du -h /private_stores/shared/directory

Queue Costs

The cost for each job is dependent on which queue it is submitted to. Listed below are the different queues on the cluster with their cost.

Usage is charged by the second. The CPU cost and memory cost are compared, and the larger of the two is what is billed.

| Queue Name | CPU Cost ($ per CPU per day) | Memory Cost ($ per GB per day) | GPU Cost ($ per GPU per day) |
|---|---|---|---|
| normal (default) | $1.00 | $0.07 | NA |
| lowmem | $0.50 | $0.10 | NA |
| GPU | $2.00 | $0.44 | $2.00 |
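As a worked example of the billing rule, the sketch below compares the CPU cost and the memory cost of a job and prints the larger one. The function name job_cost is made up for this illustration, and it leaves out GPU charges because this page does not say how they combine with the other two costs.

```shell
#!/bin/bash
# Hypothetical estimator: job_cost CPUS MEM_GB SECONDS CPU_RATE MEM_RATE
# Rates are dollars per day, taken from the Queue Costs table.
# GPU charges are deliberately not modelled.
job_cost() {
    local cpus=$1 mem_gb=$2 seconds=$3 cpu_rate=$4 mem_rate=$5
    awk -v c="$cpus" -v m="$mem_gb" -v s="$seconds" \
        -v cr="$cpu_rate" -v mr="$mem_rate" 'BEGIN {
        days = s / 86400                      # usage is charged by the second
        cpu_cost = c * cr * days
        mem_cost = m * mr * days
        printf "%.2f\n", (cpu_cost > mem_cost ? cpu_cost : mem_cost)
    }'
}

# normal queue, 4 CPUs and 100 GB for one day: memory cost (7.00) is larger
job_cost 4 100 86400 1.00 0.07

# normal queue, 4 CPUs and 16 GB for 12 hours: CPU cost (2.00) is larger
job_cost 4 16 43200 1.00 0.07
```

Because the larger of the two costs is billed, reserving far more memory than a job needs can cost more than the CPUs themselves.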

Gaining Access

- Please fill out the form at http://www.igb.illinois.edu/content/biocluster-account-form to request access to the Biocluster.

Cluster Rules

- Running jobs on the head node or login nodes is strictly prohibited. Running jobs on the head node could cause the entire cluster to crash and affect everyone's jobs on the cluster. Any program found to be running on the head node will be stopped immediately and your account could be locked. You can start an interactive session to log in to a node and manually run programs.

- Installing Software: Please email help@igb.illinois.edu for any software requests. Compiled software will be installed in /home/apps. If it is a standard RedHat package (rpm), it will be installed in its default location on the nodes.

- Creating or Moving over Programs: Programs you create or move to the cluster should be first tested by you outside the cluster for stability. Once your program is stable, then it can be moved over to the cluster for use. Unstable programs that cause problems with the cluster can result in your account being locked. Programs should only be added by CNRG personnel and not compiled in your home directory.

- Reserving Memory: SLURM allows the user to specify the amount of memory they want their program to use. If your job tries to use more memory than you have reserved, the job will run out of memory and die. Make sure to specify the correct amount of memory.

- Reserving Nodes and Processors: For each job, you must reserve the correct number of nodes and processors. By default you are reserved 1 processor on 1 node. If you are running a multiple processor job or an MPI job, you need to reserve the appropriate amount. If you do not reserve the correct amount, the cluster will confine your job to that limit, increasing its runtime.

How To Log Into The Cluster

- You will need to use an SSH client to connect.

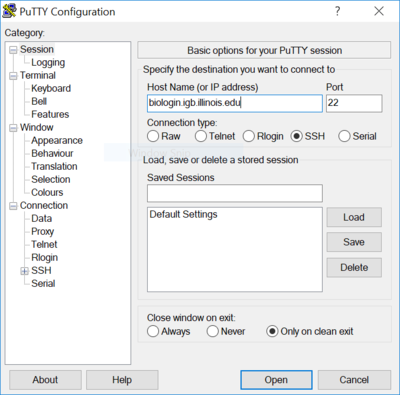

On Windows

- You can download PuTTY from http://www.chiark.greenend.org.uk/~sgtatham/putty/download.html

- Install PuTTY and run it. In the Host Name input box enter biologin.igb.illinois.edu

- Hit Open and login using your IGB account credentials.

On Mac OS X

- Simply open the terminal under Go >> Utilities >> Terminal

- Type in ssh username@biologin.igb.illinois.edu where username is your NetID.

- Hit the Enter key and type in your IGB password.

How To Submit A Cluster Job

- The cluster runs the SLURM queuing and resource management program.

- All jobs are submitted to SLURM which distributes them automatically to the Nodes.

- You can find all of the parameters that SLURM uses at https://slurm.schedmd.com/quickstart.html

- You can use our SLURM Generation Utility to help you learn to generate job scripts http://www-app2.igb.illinois.edu/tools/slurm/

Create a Job Script

- You must first create a SLURM job script file in order to tell SLURM how and what to execute on the nodes.

- Type the following into a text editor and save the file test.sh ( Linux text editing )

#!/bin/bash
#SBATCH -p normal
#SBATCH --mem=1g
#SBATCH -N 1
#SBATCH -n 1

sleep 20
echo "Test Script"

- You just created a simple SLURM Job Script.

- To submit the script to the cluster, you will use the sbatch command.

sbatch test.sh

- Line by line explanation

- #!/bin/bash - tells Linux this is a bash program and it should use a bash interpreter to execute it.

- #SBATCH - lines starting with this are SLURM parameters. For explanations of these, please scroll down to the SLURM Parameters Explanations section.

- sleep 20 - Sleep 20 seconds (only used to simulate processing time for this example)

- echo "Test Script" - Output some text to the screen when job completes ( simulate output for this example)

- For example, if you would like to run a blast job you may simply replace the last two lines with the following

module load BLAST
blastall -p blastn -d nt -i input.fasta -e 10 -o output.result -v 10 -b 5 -a 5

- Note: the module commands are explained under the Environment Modules section.

SLURM Parameters Explanations:

- To view all possible parameters

- Run man sbatch at the command line

- Go to https://slurm.schedmd.com/sbatch.html to view the man page online

| Command | Description |
|---|---|
| #SBATCH -p PARTITION | Run the job on a specific queue/partition. This defaults to the "normal" queue. |
| #SBATCH -D /tmp/working_dir | Run the script from the /tmp/working_dir directory. This defaults to the current directory you are in. |
| #SBATCH -J ExampleJobName | Name of the job will be ExampleJobName. |
| #SBATCH -e /path/to/errorfile | Split off the error stream to this file. By default output and error streams are placed in the same file. |
| #SBATCH -o /path/to/outputfile | Split off the output stream to this file. By default output and error streams are placed in the same file. |
| #SBATCH --mail-user username@illinois.edu | Send job information to the specified e-mail address. |
| #SBATCH --mail-type=BEGIN,END,FAIL | Specifies when to send a message to the e-mail address. You can select several of these with a comma-separated list (no spaces). Many other options exist. |
| #SBATCH -N X | Reserve X number of nodes. |
| #SBATCH -n X | Reserve X number of CPUs. |
| #SBATCH --mem=XG | Reserve X gigabytes of RAM for the job. |
| #SBATCH --gres=gpu:X | Reserve X NVIDIA GPUs. (Only on GPU queues) |
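To show how several of these parameters fit together, here is a sketch of a job script. The job name, resource amounts and file names are placeholders chosen for this example, not recommended settings. %j in the output file names is replaced by SLURM with the job ID, and SLURM_NTASKS and SLURM_JOB_ID are environment variables SLURM sets inside a running job.

```shell
#!/bin/bash
#SBATCH -p normal
#SBATCH -J ExampleJobName
#SBATCH -N 1
#SBATCH -n 4
#SBATCH --mem=8G
#SBATCH -o example_%j.out
#SBATCH -e example_%j.err
#SBATCH --mail-type=END,FAIL

# Inside a job SLURM sets these variables; the fallbacks only apply when
# the script is run by hand outside the scheduler.
threads=${SLURM_NTASKS:-1}
job_id=${SLURM_JOB_ID:-none}

# Pass $threads to your program's thread option so it uses exactly the
# number of CPUs reserved with -n.
summary="job=${job_id} threads=${threads}"
echo "$summary"
```

Reading the CPU count from SLURM_NTASKS instead of hard-coding it keeps the program's thread count and the reservation in step when you later change -n.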

Create a Job Array Script

Making a new copy of the script for every input data file and submitting each one is time consuming. An alternative is to make a job array using the --array option in your submission script. The --array option allows many copies of the same script to be queued all at once. You can use the $SLURM_ARRAY_TASK_ID variable to differentiate between the different jobs in the array. A detailed example on how to do this is available at Job Arrays.
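A minimal sketch of an array script is below. It assumes a hypothetical file input_files.txt with one input path per line, and the helper name pick_input is made up for this example. Each array task reads the line whose number matches its $SLURM_ARRAY_TASK_ID.

```shell
#!/bin/bash
#SBATCH -p normal
#SBATCH --mem=1g
#SBATCH -N 1
#SBATCH -n 1
#SBATCH --array=1-3

# Print line number $2 of the list file $1 (one input path per line).
pick_input() {
    sed -n "${2}p" "$1"
}

# input_files.txt is a hypothetical list; adjust --array to its line count.
list=${INPUT_LIST:-input_files.txt}
# SLURM sets SLURM_ARRAY_TASK_ID for each array task; default to 1 by hand.
task=${SLURM_ARRAY_TASK_ID:-1}

if [ -f "$list" ]; then
    input=$(pick_input "$list" "$task")
    echo "Task $task would process $input"
fi
```

Submitting this once with sbatch queues three tasks, numbered 1 to 3, each handling a different line of the list.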

Start An Interactive Session

- Use the srun command if you would like to run a job interactively.

srun --pty /bin/bash

- This will automatically reserve you a slot on one of the compute nodes and will start a terminal session on it.

- Closing your terminal window will also kill your processes running in your interactive srun session, therefore it's better to submit large jobs via non-interactive sbatch.

X11 Graphical Applications

- To run an application with a user interface you will need to set up an X server on your computer (see Xserver Setup)

- Then add the --x11 parameter to your srun command

srun --x11 --pty /bin/bash

View/Delete Submitted Jobs

Viewing Job Status

- To get a simple view of your current running jobs you may type:

squeue -u userid

- This command brings up a list of your current running jobs.

- The first number represents the job's ID number.

- Jobs may have different status flags:

- R = job is currently running

- For more detailed view type:

squeue -l

- This will return a long-format list of jobs with additional details such as time limits and the nodes each job is using.
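If you have many jobs, a per-state count can be easier to read than the full list. The sketch below is one way to do that. The helper name count_states is made up here, and the sample input only stands in for real squeue output, where -h drops the header line and -o %T prints just the job state.

```shell
#!/bin/bash
# Hypothetical helper: count how many times each job state appears on stdin.
count_states() {
    sort | uniq -c | awk '{ print $2 ": " $1 }'
}

# Stand-in input for illustration; prints "PENDING: 1" then "RUNNING: 2"
printf 'RUNNING\nPENDING\nRUNNING\n' | count_states

# On the cluster (userid is the placeholder used above):
# squeue -u userid -h -o %T | count_states
```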

List Queues

- Simple view

sinfo

This will show all queues as well as which nodes in those queues are fully used (alloc), partially used (mix), unused (idle), or unavailable (down).

List All Jobs on Cluster With Nodes

squeue

Deleting Jobs

- Note: You can only delete jobs which are owned by you.

- To delete a job by job-ID number:

- You will need to use scancel, for example to delete a job with ID number 5523 you would type:

scancel 5523

- Delete all of your jobs

scancel -u userid

Troubleshooting job errors

- To view job details when a job fails or shows an error in the status column, use scontrol show job. For example, if job # 23451 failed you would type:

scontrol show job 23451
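The output of scontrol show job is a long block of key=value pairs. A small filter like the sketch below can pull out a single field. The helper name job_field and the sample text are made up for illustration; the sample only imitates the key=value layout and is not output from a real job.

```shell
#!/bin/bash
# Hypothetical helper: print the value of one key from key=value text on stdin.
job_field() {
    tr -s ' \t' '\n' | grep "^$1=" | head -n 1 | cut -d= -f2-
}

# Made-up sample in the key=value layout, for illustration only
sample='   JobState=FAILED Reason=NonZeroExitCode Dependency=(null)
   RunTime=00:00:20 TimeLimit=1-00:00:00 ExitCode=1:0'

echo "state:  $(printf '%s\n' "$sample" | job_field JobState)"
echo "reason: $(printf '%s\n' "$sample" | job_field Reason)"
echo "exit:   $(printf '%s\n' "$sample" | job_field ExitCode)"

# On the cluster:
# scontrol show job 23451 | job_field JobState
```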

Applications

Application Lists

- View a list of installed applications at https://help.igb.illinois.edu/Biocluster_Applications

- To list the currently installed applications from the command line, run module avail

Application Installation

- Please email help@igb.illinois.edu to request new applications or version upgrades

- The Biocluster uses EasyBuild to build and install software. You can read more about EasyBuild at https://github.com/easybuilders/easybuild

- The Biocluster EasyBuild scripts are located at https://github.com/IGB-UIUC/easybuild

Environment Modules

- The Biocluster uses the Lmod modules package to manage the software that is installed. You can read more about Lmod at https://lmod.readthedocs.io/en/latest/

- To use an application, you need to use the module command to load the settings for an application

- To load a particular environment for example QIIME/1.9.1, simply run this command:

module load QIIME/1.9.1

- If you would like to simply load the latest version, run the command without the /1.9.1 (version number):

module load QIIME

- To view which environments you have loaded simply run module list:

bash-4.1$ module list
Currently Loaded Modules:
  1) BLAST/2.2.26-Linux_x86_64   2) QIIME/1.9.1

- When submitting a job using an sbatch script you will have to add the module load QIIME/1.9.1 line before running QIIME in the script.

- To unload a module simply run module unload:

module unload QIIME

- Unload all modules

module purge

Containers

- The Biocluster supports Singularity to run containers.

- The guide on how to use them is at Biocluster Singularity

R Packages

- We have a local mirror of CRAN and Bioconductor. This allows you to install packages through an interactive session into your home folder.

- To install a package, start an interactive session

srun --pty /bin/bash

- Load the R module

module load R/3.6.0-IGB-gcc-8.2.0

- Run R

R

- For CRAN packages, run install.packages()

install.packages('shape');

- For Bioconductor packages, the BiocManager package is already installed. You just need to run BiocManager::install to install a package

BiocManager::install('dada2')

- If the package requires external dependencies, you should email us to get it installed centrally.

Mirror Service - Genomic Databases

Transferring data files

Transferring using SFTP/SCP

Using WinSCP

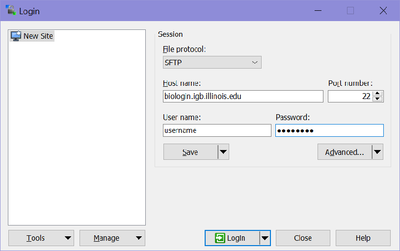

- Download WinSCP installation package from http://winscp.net/eng/download.php#download2 and install it.

- Once installed, run WinSCP >> enter biologin.igb.illinois.edu for the Host name >> enter your IGB user name and password and click Login

- Once you hit "Login", you should be connected to your Biocluster home folder, as shown below.

- From here you should be able to download or transfer your files.

Using CyberDuck

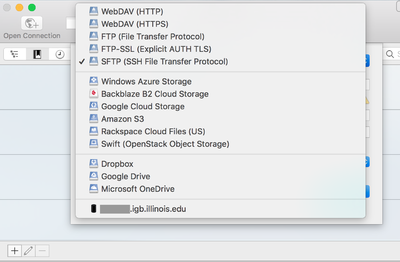

- To download cyberduck go to http://cyberduck.c and click on the large Zip icon to download.

- Once Cyberduck is installed on OS X you may start the program.

- Click on Open Connection.

- From the drop down menu at the top of the popup window select SFTP (SSH File Transfer Protocol)

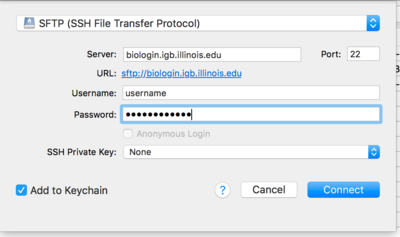

- Now in the Server: input box enter biologin.igb.illinois.edu and for Username and password enter your IGB credentials.

- Click Connect.

- You may now download or transfer your files.

- NOTICE: Cyberduck by default wants to open multiple connections for transferring files. The Biocluster firewall limits you to 4 connections a minute. This can cause transfers to time out. You can change Cyberduck to only use 1 connection by going to Preferences->Transfers->Transfer Files. Select Open Single Connection.

Transferring using Globus

- The Biocluster has a Globus endpoint set up. The endpoint name is igb#biocluster.igb.illinois.edu

- Globus allows the transferring of very large files reliably.

- A guide on how to use Globus is here

Transferring from Biotech FTP Server

- One option to transfer data from the Biotech FTP server is to use a program called sftp.

- It can download 1 file or an entire directory.

- Replace USERNAME with the username provided by the Biotech Center.

The below example will download the file test_file.tar.gz

sftp USERNAME@ftp.biotech.illinois.edu:test_file.tar.gz

- If you want to download an entire directory, you need to set the -r parameter. This will recursively go through the entire directory and download it into the current directory. Make sure to include the final dot at the end of the command.

sftp -r USERNAME@ftp.biotech.illinois.edu:test_dir/ .

References

- OpenHPC https://openhpc.community/

- SLURM Job Scheduler Documentation - https://slurm.schedmd.com/

- Rosetta Stone of Schedulers - https://slurm.schedmd.com/rosetta.pdf

- SLURM Quick Reference - https://slurm.schedmd.com/pdfs/summary.pdf

- CEPH Filesystem http://ceph.com/ceph-storage/file-system/

- GPFS Filesystem https://en.wikipedia.org/wiki/IBM_Spectrum_Scale

- Lmod Module Homepage - https://www.tacc.utexas.edu/research-development/tacc-projects/lmod

- Lmod Documentation - https://lmod.readthedocs.io/en/latest/